How to Choose the Right DeepSeek-R1 Model Version

- Kate

- 2025-06-27 11:58:57

DeepSeek is one of the most popular AI tools right now, and many people are starting to use it. However, you may feel it doesn’t work as well as you expected. In most cases, that’s because you picked a model that doesn’t match your needs or computer setup. Choosing the right DeepSeek-R1 model version can make a big difference in how well it performs. This article will help you understand the different model options and find the one that works best for you.

Part 1. Overview of DeepSeek-R1 Model Versions

DeepSeek-R1 models are named according to their parameter size, measured in billions (B). Larger models have more parameters, which generally means better performance but higher resource demands.

Here are the common versions:

1. R1-1.5B – A lightweight model with a small size and low resource requirements. Best for basic tasks like short text generation or simple Q&A.

2. R1-7B – A balanced model that offers solid performance with moderate hardware demands. Suitable for tasks such as content writing, spreadsheets, or general analysis.

3. R1-8B – Slightly more powerful than the 7B version. Ideal for precision-focused tasks like code generation and logic-based queries.

4. R1-14B – A high-performance model designed for more complex tasks, including long-form writing, math reasoning, and data processing.

5. R1-32B – A professional-grade model with strong performance. Great for large-scale tasks such as language modeling, AI training, or financial forecasting.

6. R1-70B – A top-tier model for high-demand applications. Perfect for advanced AI use cases like multimodal data processing. Requires powerful CPUs and GPUs—ideal for research institutions or well-funded teams.

7. R1-671B (Full Power Version) – The most advanced model in the lineup. Designed for national-level or ultra-large AI research, such as climate modeling, genomics, and AGI exploration.

Part 2. Differences Between DeepSeek-R1 Model Versions

Each DeepSeek-R1 model version serves different use cases based on several core factors. Below are five common aspects to help you understand how they differ:

1. Model Size and Performance

R1-671B: As the largest version, this model offers superior accuracy across a wide range of tasks, including mathematical reasoning, complex logic, and long-form content generation. It’s designed for precision at scale.

R1-1.5B to R1-70B: Accuracy improves progressively with model size. While smaller models like 1.5B, 7B, or 8B perform well on basic tasks, they may struggle with rare or complex queries.

2. Task Complexity

R1-1.5B to R1-14B: Well-suited for simple tasks such as summarization, basic dialogue, and straightforward content creation. However, they may lack deeper reasoning capabilities.

R1-32B to R1-671B: Better equipped for high-complexity tasks like multi-step reasoning, in-depth analysis, multi-turn conversations, and coding. These models excel in understanding context and producing coherent long-form outputs.

3. Resource Requirements and Cost

R1-671B: Requires significant computational resources, including dozens of high-end GPUs and massive datasets for training. The cost and infrastructure demands are very high.

R1-1.5B to R1-70B: More accessible in terms of compute and budget. These models can be trained and fine-tuned with fewer GPUs and less data, making them suitable for startups and small-scale projects.

4. Inference and Deployment

R1-1.5B to R1-7B: These lightweight models can run on consumer-grade GPUs (such as the RTX 3060) or even edge devices, with memory usage in the range of 3–15GB. They are fast to load and easy to deploy.

R1-70B and above: Require enterprise-level GPUs (such as NVIDIA A100/H100) or distributed environments. These models often exceed 100GB of memory for inference, making lower-precision formats or quantization (e.g., FP16, INT8) essential for deployment.
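
The memory figures above follow roughly from parameter count multiplied by bytes per parameter. The sketch below is a back-of-the-envelope estimate, not an official sizing tool: the function name `weight_memory_gb` is hypothetical, INT4 is included as an assumption (the article only mentions FP16 and INT8), and the result covers model weights only. Real inference also needs memory for activations and the KV cache, so treat these numbers as a lower bound.

```python
# Bytes needed to store one parameter at each precision (weights only).
# INT4 is an assumption added for illustration; the article names FP16 and INT8.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str = "fp16") -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).

    params_billions * 1e9 parameters * bytes per parameter / 1e9 bytes per GB
    simplifies to params_billions * bytes per parameter.
    """
    return params_billions * BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    # Sizes taken from the version list in this article.
    for size in (1.5, 7, 8, 14, 32, 70, 671):
        row = ", ".join(
            f"{p}: {weight_memory_gb(size, p):g} GB" for p in BYTES_PER_PARAM
        )
        print(f"R1-{size:g}B -> {row}")
```

Under these assumptions, the 1.5B and 7B models need about 3GB and 14GB at FP16, which lines up with the 3–15GB range quoted above, while the 70B model needs about 140GB at FP16 and about 70GB at INT8.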

5. Recommended Use Cases

R1-671B: Best for mission-critical applications where accuracy and performance are non-negotiable—ideal for national-scale AI research, complex simulations (e.g., genomics or climate modeling), or advanced enterprise analytics.

R1-1.5B to R1-7B: Great for low-latency environments and basic needs, such as mobile assistants, simple text generation, or small automation tools where speed and efficiency are key.

R1-8B to R1-14B: A solid choice for SMBs needing a balance between performance and resource requirements—for instance, customer support bots or content automation.

R1-32B to R1-70B: Designed for more demanding applications such as professional QA systems, specialized industry tasks, and mid-scale content platforms requiring strong language understanding.

Part 3. Recommendations for Choosing a Model Version

The DeepSeek-R1 series comes in many versions to fit different needs. The models are good at reasoning, handling long contexts, and multi-token prediction. But they also have some drawbacks, such as occasional errors, security risks, and sensitivity to how you phrase your questions. So, it’s important to pick a model that matches what you need.

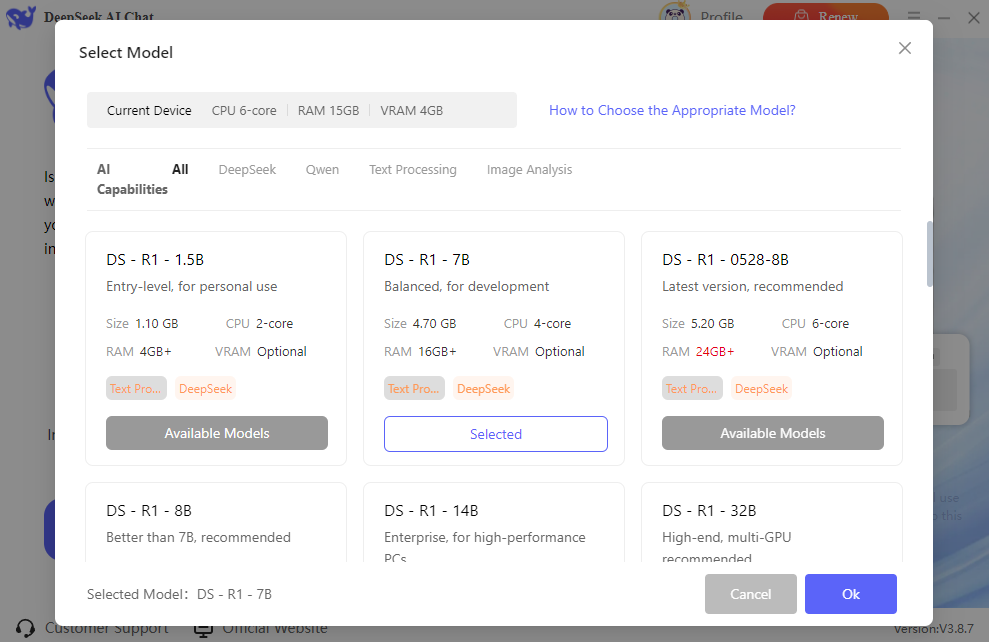

DeepSeek AI Chat offers 1.5B, 7B, 8B, 14B, 32B, 70B, and 671B models. You can choose the one that works best for you. The software will also suggest the best model based on your computer’s specs to make sure it runs smoothly.
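
As a rough illustration of what a spec-based suggestion could look like, the sketch below picks the largest listed model whose estimated footprint fits in a given amount of GPU memory. This is not how DeepSeek AI Chat actually chooses a model; the function `suggest_model`, the 2 bytes per parameter default (FP16), and the 20% headroom factor are all assumptions made for the example.

```python
# Model sizes (in billions of parameters) listed in this article.
MODEL_SIZES_B = [1.5, 7, 8, 14, 32, 70, 671]


def suggest_model(available_gb, bytes_per_param=2.0, headroom=1.2):
    """Return the largest model name that fits in available_gb, or None.

    Hypothetical heuristic: estimated footprint is
    size (billions) * bytes_per_param * headroom, where headroom is an
    assumed allowance for activations and cache on top of the weights.
    """
    fitting = [
        size
        for size in MODEL_SIZES_B
        if size * bytes_per_param * headroom <= available_gb
    ]
    if not fitting:
        return None
    return f"R1-{max(fitting):g}B"


if __name__ == "__main__":
    for memory_gb in (2, 12, 24, 80):
        print(f"{memory_gb} GB -> {suggest_model(memory_gb)}")
```

Lowering `bytes_per_param` to 1.0 models INT8 quantization and lets a larger version fit in the same memory, which is the trade-off described in Part 2.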